IC Python API:Audio Visualizer

Latest revision as of 19:39, 18 October 2020, by Chuck (RL)


Required Modules

Besides the core Reallusion Python API (RLPy), we'll need the following modules:

- os for reading from the Windows file system.

- numpy for transforming arrays.

- wave for reading wav file data.

- PySide2 for building the user interface.

```python
import RLPy
import os
import numpy as np
import wave
from PySide2 import QtCore, QtGui, QtWidgets, QtUiTools
from PySide2.shiboken2 import wrapInstance
```

User Interface

For the sake of convenience, we'll be reading from a Qt UI file. You can download Audio_Visualizer.ui here.

```python
# Create an iClone dock widget and wrap it as a Qt QDockWidget
window = RLPy.RUi.CreateRDockWidget()
window.SetWindowTitle("Audio Visualizer (Mono)")
window.SetAllowedAreas(RLPy.EDockWidgetAreas_BottomDockWidgetArea)
dock = wrapInstance(int(window.GetWindow()), QtWidgets.QDockWidget)
dock.resize(1000, 300)

# Load Audio_Visualizer.ui from the same folder as this script
ui = QtCore.QFile(os.path.dirname(__file__) + "/Audio_Visualizer.ui")
ui.open(QtCore.QFile.ReadOnly)
widget = QtUiTools.QUiLoader().load(ui)
ui.close()

# The progress bar is only shown while a waveform is being drawn
widget.progressBar.setVisible(False)
dock.setWidget(widget)
```

Audio Bit-Depth

Audio files, just like images, can be saved with different bit-depths depending on how faithfully they need to reproduce the original sound. As with images, audio files with higher bit-depths are more "accurate" and more faithful to the recorded source audio.

Without installing and relying on the soundfile module, we can guess the bit-depth from the maximum sample value of an audio file. For example, if the highest signed sample value is 32,767, it falls between the 8-bit (256 levels) and 16-bit (65,536 levels) ranges, so it's reasonable to assume that the audio is 16-bit.
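To make the range reasoning concrete, here is a small sketch that lists the values a signed integer sample can hold at common bit-depths. The helper name `signed_range` is hypothetical and not part of the script. It assumes signed PCM. 8-bit WAV files actually store unsigned samples (0 to 255), so treat the 8-bit row only as a rough guide for that format.

```python
def signed_range(bits):
    """Return (min, max) for a signed integer sample with the given number of bits."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


for bits in (8, 16, 24, 32):
    low, high = signed_range(bits)
    # e.g. "16-bit: -32,768 to 32,767 (65,536 levels)"
    print(f"{bits}-bit: {low:,} to {high:,} ({2 ** bits:,} levels)")
```

8-bit samples cannot reach a peak of 32,767, but that value is exactly the 16-bit maximum. This is why the example concludes that the audio is 16-bit.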

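We can use the following function to tease out the bit-depth. Note that it returns the number of levels (e.g. 65,536 for 16-bit audio) rather than the number of bits: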

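```python
def guess_bit_depth(value):
    # Candidate ranges (number of levels) for 8-, 16-, 24- and 32-bit audio
    bits = [2**8., 2**16., 2**24., 2**32.]
    # Sort the observed peak value in among the candidates...
    bits.append(value)
    bits.sort()
    # ...and take the next range above it, e.g. 32,767 -> 65,536 (16-bit)
    bit_depth = bits[bits.index(value)+1]
    return bit_depth
```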

Again, this is a crude implementation. For a more robust method, use the soundfile module and read the audio's subtype attribute (e.g. PCM_16).
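The original script does not use the following alternative. The standard-library wave module already reports the sample width in bytes. This is the `sampwidth` value that open_audio_file unpacks from wav.getparams(). Below is a minimal sketch using a hypothetical helper, `wav_bit_depth`. It writes a short 16-bit WAV into memory and then reads the bit-depth back from its header.

```python
import io
import wave


def wav_bit_depth(file_obj):
    """Return the bit-depth stored in a WAV header (sample width in bytes * 8)."""
    with wave.open(file_obj, "rb") as wav:
        return wav.getsampwidth() * 8


# Build a tiny 16-bit mono WAV in memory so the example needs no file on disk
buffer = io.BytesIO()
with wave.open(buffer, "wb") as out:
    out.setnchannels(1)
    out.setsampwidth(2)          # 2 bytes per sample = 16-bit
    out.setframerate(44100)
    out.writeframes(b"\x00\x00" * 10)

buffer.seek(0)
print(wav_bit_depth(buffer))     # 16
```

Since guess_bit_depth returns a number of levels, `2 ** wav_bit_depth(...)` gives the equivalent value: 65,536 for 16-bit audio.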

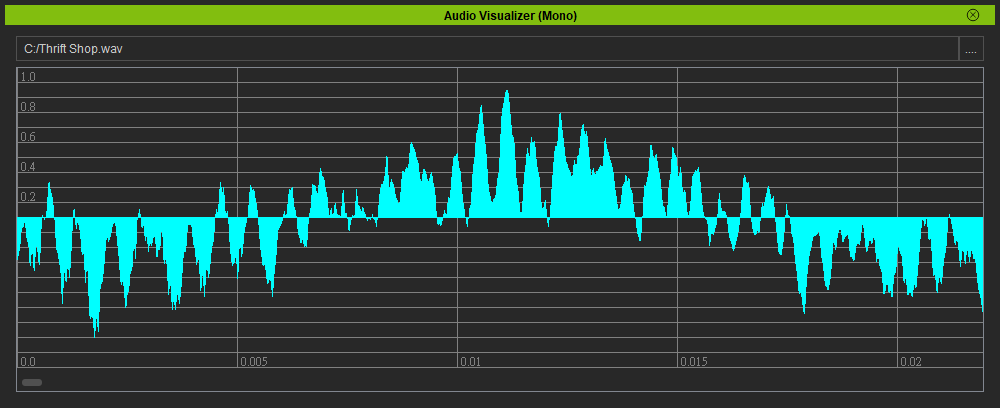

Plotting the Signals

We'll be using Qt's QGraphicsScene and QGraphicsView to plot the sound waveform. QGraphicsView is a widget embedded in the .ui file. We'll use a simple 1:1 mapping of one horizontal pixel per audio sample, so the horizontal scale follows the sample rate. For example, if the audio is sampled at 44,100 Hz, we'll be plotting 44,100 lines per second of the track. The timeline is therefore extremely zoomed in, with time labels every 5 milliseconds (0.005 sec). We'll map the amplitude to a signed, normalized scale from -1 to 1 based on the audio file's bit-depth. Amplitude can be negative because the samples record changes in sound pressure. Positive values correspond to compression and negative values to rarefaction.
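The mapping can be checked with a little arithmetic. The sketch below reuses two formulas from plot_signal: `int(framerate * 0.005)` for the label spacing and `150 / bit_depth * -2` for the vertical scale. They are wrapped in hypothetical helpers so they can run without Qt. The sketch assumes 16-bit audio (65,536 levels).

```python
def tick_interval(framerate, seconds=0.005):
    """Samples (= pixels, at one pixel per sample) between time labels."""
    return int(framerate * seconds)


def sample_to_y(sample, levels=2**16):
    """Scene y-coordinate of a sample, using the same factor as plot_signal.

    A full-scale 16-bit sample lands about 150 px from the center line.
    The minus sign makes positive samples point up, since scene y grows downward.
    """
    factor = 150 / levels * -2
    return sample * factor


print(tick_interval(44100))            # 220 pixels per 5 ms label
print(round(sample_to_y(32767), 2))    # -150.0
print(sample_to_y(-32768))             # 150.0
```

At 44,100 Hz a time label therefore appears every 220 pixels. A full-scale sample reaches the 1.0 grid line, which sits 150 pixels from the center.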

On a side note, the range of audible frequencies for humans is roughly 20 to 20,000 Hz. Common audio sample rates such as 44,100 Hz are more than adequate to represent this range.
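Common sample rates are sufficient because of the Nyquist limit: a sample rate can represent frequencies up to half its value. Here is a quick check. The `nyquist` helper is illustrative only.

```python
def nyquist(framerate):
    """Highest frequency (Hz) representable at the given sample rate."""
    return framerate / 2


HUMAN_HEARING_MAX_HZ = 20000  # upper end of the roughly 20-20,000 Hz audible range

for rate in (22050, 44100, 48000):
    limit = nyquist(rate)
    covers = limit >= HUMAN_HEARING_MAX_HZ
    print(f"{rate} Hz sampling -> up to {limit:,.0f} Hz, covers hearing: {covers}")
```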

```python
def plot_signal(framerate, signal):
    widget.progressBar.setVisible(True)
    blue_pen = QtGui.QPen(QtGui.QColor(0, 255, 255))
    grey_pen = QtGui.QPen(QtGui.QColor(128, 128, 128))
    scene = QtWidgets.QGraphicsScene()
    # Scale samples so a full-scale amplitude maps to 150 pixels;
    # the minus sign makes positive amplitudes point up (scene y grows downward)
    bit_depth = guess_bit_depth(max(signal))
    factor = 150 / bit_depth * -2
    widget.progressBar.setRange(0, len(signal))
    ticks = 0
    interval = int(framerate * 0.005)  # 5 millisecond interval

    for i in range(1, 11):  # Horizontal grid lines every 0.1 of normalized amplitude
        scene.addLine(0, i * 15, len(signal), i * 15, grey_pen)
        scene.addLine(0, -i * 15, len(signal), -i * 15, grey_pen)
        if i % 2 == 0:
            text = scene.addText(str(round(i * 0.1, 1)))
            text.setDefaultTextColor(QtGui.QColor(128, 128, 128))
            text.setPos(0, i * -15)

    for i in range(len(signal)):
        if i == ticks * interval:
            text = scene.addText(str(round(ticks * 0.005, 3)))
            text.setDefaultTextColor(QtGui.QColor(128, 128, 128))
            text.setPos(i, 135)
            # Add vertical lines for time value
            scene.addLine(i, -150, i, 150, grey_pen)
            ticks += 1
        # One vertical line per sample, from the center line to its amplitude
        scene.addLine(i, 0, i, signal[i] * factor, blue_pen)
        widget.progressBar.setValue(i)

    widget.progressBar.setVisible(False)
    widget.graphicsView.setScene(scene)
```
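For every sample, plot_signal works out two things in pure Python: whether a 5 ms time label belongs at that x position, and how tall the sample's line is. Both can be computed up front with NumPy. The sketch below uses a hypothetical helper, `waveform_geometry`, which is not part of the original script. It assumes 16-bit audio (65,536 levels). Unlike plot_signal, it also guards against a zero label interval for sample rates below 200 Hz.

```python
import numpy as np


def waveform_geometry(framerate, signal, levels=2**16):
    """Return (tick_x, line_y) for a plot_signal-style waveform, without Qt.

    tick_x: sample indices (= x pixels) that get a time label and grey grid line.
    line_y: scene y end point of each sample's vertical line; the center line is y = 0.
    """
    signal = np.asarray(signal)
    interval = max(int(framerate * 0.005), 1)  # 5 ms in samples, never zero
    tick_x = np.arange(0, len(signal), interval)
    factor = 150 / levels * -2                 # same vertical scale as plot_signal
    line_y = signal * factor
    return tick_x, line_y


# 1,000 samples at 44,100 Hz: a time label every 220 samples
tick_x, line_y = waveform_geometry(44100, np.zeros(1000, dtype=np.int16))
print(tick_x.tolist())  # [0, 220, 440, 660, 880]
```

A drawing loop could then consume these arrays, for example `for x, y in enumerate(line_y): scene.addLine(x, 0, x, y, blue_pen)`. It still adds one line item per sample, but the arithmetic stays out of the loop.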

Reading the Audio File

Processing the audio file into usable data is tricky, as there are some pitfalls you must be aware of:

- Wave data is read as raw bytes, which must be converted to integer sample values to be comprehensible.

- In WAV files, stereo samples are interleaved. That means the left and right channel samples alternate (left, right, left, right, ...) in one single stream.

- In order to derive a single waveform for stereo audio, we take the higher value of the two channels at each sample and merge them into a mono track.
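These steps can be sketched as a standalone NumPy function. The name `stereo_bytes_to_mono` is hypothetical. The sketch assumes 16-bit little-endian PCM, which is how WAV stores 16-bit samples. It uses np.frombuffer in place of the deprecated np.fromstring. np.frombuffer returns a read-only view of the bytes, so the merge uses np.maximum, which builds a new array instead of writing back into channel 0.

```python
import numpy as np


def stereo_bytes_to_mono(raw, nchannels):
    """Decode 16-bit PCM bytes; merge stereo to mono by keeping the larger sample value."""
    samples = np.frombuffer(raw, dtype="<i2")  # bytes -> signed 16-bit little-endian ints
    if nchannels == 2:
        pairs = samples.reshape(-1, 2)         # one row per frame: [left, right]
        left, right = pairs.T                  # row 0 = left channel, row 1 = right channel
        samples = np.maximum(left, right)      # element-wise larger value, as a new array
    return samples


# Interleaved frames (left, right): (100, -5), (-20, 300), (7, 7)
raw = np.array([100, -5, -20, 300, 7, 7], dtype="<i2").tobytes()
print(stereo_bytes_to_mono(raw, 2).tolist())  # [100, 300, 7]
```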

```python
def open_audio_file():
    file_path = RLPy.RUi.OpenFileDialog("Wave Files(*.wav)")
    if file_path:  # An empty string means the dialog was cancelled
        widget.path.setText(file_path)
        with wave.open(file_path, 'rb') as wav:
            nchannels, sampwidth, framerate, nframes, comptype, compname = wav.getparams()
            str_data = wav.readframes(nframes)
        # Convert bytes to integers (assumes 16-bit PCM samples)
        wav_data = np.frombuffer(str_data, dtype=np.short)
        if nchannels == 2:
            # Stereo sounds are two mono tracks interleaved (L, R, L, R, ...), so we need to:
            # Separate the samples into rows of [left, right] (indefinite rows and 2 columns)
            wav_data = wav_data.reshape(-1, 2)
            # Transpose so row 0 holds every left sample and row 1 every right sample
            wav_data = wav_data.T
            # Keep the higher value of the two channels at each sample, merging stereo to mono
            wav_data = np.maximum(wav_data[0], wav_data[1])
        plot_signal(framerate, wav_data)


widget.button.clicked.connect(open_audio_file)
window.Show()
```

Everything Put Together

You can copy and paste the following code into a .py file, place Audio_Visualizer.ui in the same folder, and load the script into iClone via Script > Load Python.

```python
import RLPy
import os
import numpy as np
import wave
from PySide2 import QtCore, QtGui, QtWidgets, QtUiTools
from PySide2.shiboken2 import wrapInstance

# Create an iClone dock widget and wrap it as a Qt QDockWidget
window = RLPy.RUi.CreateRDockWidget()
window.SetWindowTitle("Audio Visualizer (Mono)")
window.SetAllowedAreas(RLPy.EDockWidgetAreas_BottomDockWidgetArea)
dock = wrapInstance(int(window.GetWindow()), QtWidgets.QDockWidget)
dock.resize(1000, 300)

# Load Audio_Visualizer.ui from the same folder as this script
ui = QtCore.QFile(os.path.dirname(__file__) + "/Audio_Visualizer.ui")
ui.open(QtCore.QFile.ReadOnly)
widget = QtUiTools.QUiLoader().load(ui)
ui.close()

widget.progressBar.setVisible(False)
dock.setWidget(widget)


def guess_bit_depth(value):
    # Candidate ranges (number of levels) for 8-, 16-, 24- and 32-bit audio
    bits = [2**8., 2**16., 2**24., 2**32.]
    # Sort the observed peak in among the candidates and take the next range above it
    bits.append(value)
    bits.sort()
    bit_depth = bits[bits.index(value)+1]
    return bit_depth


def plot_signal(framerate, signal):
    widget.progressBar.setVisible(True)
    blue_pen = QtGui.QPen(QtGui.QColor(0, 255, 255))
    grey_pen = QtGui.QPen(QtGui.QColor(128, 128, 128))
    scene = QtWidgets.QGraphicsScene()
    # Full-scale amplitude maps to 150 px; negative so positive samples point up
    bit_depth = guess_bit_depth(max(signal))
    factor = 150 / bit_depth * -2
    widget.progressBar.setRange(0, len(signal))
    ticks = 0
    interval = int(framerate * 0.005)  # 5 millisecond interval

    for i in range(1, 11):  # Horizontal grid lines every 0.1 of normalized amplitude
        scene.addLine(0, i * 15, len(signal), i * 15, grey_pen)
        scene.addLine(0, -i * 15, len(signal), -i * 15, grey_pen)
        if i % 2 == 0:
            text = scene.addText(str(round(i * 0.1, 1)))
            text.setDefaultTextColor(QtGui.QColor(128, 128, 128))
            text.setPos(0, i * -15)

    for i in range(len(signal)):
        if i == ticks * interval:
            text = scene.addText(str(round(ticks * 0.005, 3)))
            text.setDefaultTextColor(QtGui.QColor(128, 128, 128))
            text.setPos(i, 135)
            # Add vertical lines for time value
            scene.addLine(i, -150, i, 150, grey_pen)
            ticks += 1
        scene.addLine(i, 0, i, signal[i] * factor, blue_pen)
        widget.progressBar.setValue(i)

    widget.progressBar.setVisible(False)
    widget.graphicsView.setScene(scene)


def open_audio_file():
    file_path = RLPy.RUi.OpenFileDialog("Wave Files(*.wav)")
    if file_path:  # An empty string means the dialog was cancelled
        widget.path.setText(file_path)
        with wave.open(file_path, 'rb') as wav:
            nchannels, sampwidth, framerate, nframes, comptype, compname = wav.getparams()
            str_data = wav.readframes(nframes)
        # Convert bytes to integers (assumes 16-bit PCM samples)
        wav_data = np.frombuffer(str_data, dtype=np.short)
        if nchannels == 2:
            # Stereo is interleaved (L, R, L, R, ...): split into rows of [left, right]
            wav_data = wav_data.reshape(-1, 2)
            # Transpose so row 0 holds the left channel and row 1 the right channel
            wav_data = wav_data.T
            # Keep the higher amplitude value of the two channels, merging stereo to mono
            wav_data = np.maximum(wav_data[0], wav_data[1])
        plot_signal(framerate, wav_data)


widget.button.clicked.connect(open_audio_file)
window.Show()
```

APIs Used

You can research the following references for the APIs deployed in this code.