IC8 Python API:RLPy RIVisemeComponent

Contents

- 1 Description

- 2 Class Methods

- 2.1 RLPy.RIVisemeComponent.AddVisemeKey(self, kKey)

- 2.2 RLPy.RIVisemeComponent.AddVisemeOptionClip(self, kSmoothOption, kStartTick, strClipName)

- 2.3 RLPy.RIVisemeComponent.AddVisemesClip(self, kTick, strClipName, kClipLength)

- 2.4 RLPy.RIVisemeComponent.AddVisemesClipWithData(self, args)

- 2.5 RLPy.RIVisemeComponent.GetClip(self, uIndex)

- 2.6 RLPy.RIVisemeComponent.GetClipByTime(self, kHitTime)

- 2.7 RLPy.RIVisemeComponent.GetClipCount(self)

- 2.8 RLPy.RIVisemeComponent.GetStrength(self)

- 2.9 RLPy.RIVisemeComponent.GetVisemeBones(self)

- 2.10 RLPy.RIVisemeComponent.GetVisemeKey(self, kTime, kKey)

- 2.11 RLPy.RIVisemeComponent.GetVisemeKeys(self)

- 2.12 RLPy.RIVisemeComponent.GetVisemeMorphWeights(self)

- 2.13 RLPy.RIVisemeComponent.GetVisemeNames(self)

- 2.14 RLPy.RIVisemeComponent.GetWords(self, nClipIndex = -1)

- 2.15 RLPy.RIVisemeComponent.LoadVocal(self, args)

- 2.16 RLPy.RIVisemeComponent.RemoveVisemesClip(self, kTick)

- 2.17 RLPy.RIVisemeComponent.RemoveVisemesKey(self, kKey)

- 2.18 RLPy.RIVisemeComponent.TextToSpeech(self, args)

- 2.19 RLPy.RIVisemeComponent.TextToVisemeData(self, strContent, fVolume = 100., fPitch = 50., fSpeed = 50.)

- Main article: iC8 Modules.

- Last modified: 12/28/2022

Description

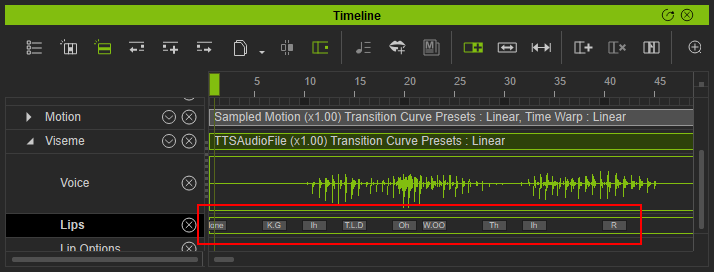

This class provides viseme related operations including reading an audio source and creating a viseme clip out of it. It can also add and remove keys from the viseme clip as well as provide Text-to-Speech capabilities. A viseme track within the timeline will show viseme clips and mouth shapes (viseme keys). The lip options track also includes the viseme smooth clip which can be used to smooth out the transition between the different mouth shapes in the event of a lip-sync animation.

Viseme keys in the "Lips" track:

Class Methods

RLPy.RIVisemeComponent.AddVisemeKey(self, kKey)

Add a viseme key.

Experimental API

Parameters

- kKey[IN] The key to be added - RLPy.RVisemeKey

Returns

Success or Fail or InvalidParameter - RLPy.RStatus

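```python
import RLPy

# Get the viseme component of the first avatar in the scene
avatar = RLPy.RScene.GetAvatars()[0]
viseme_component = avatar.GetVisemeComponent()

# Add a viseme key to the viseme clip
expressiveness = 50
viseme_key = RLPy.RVisemeKey(RLPy.EVisemeID_AH, expressiveness)
viseme_key.SetTime(RLPy.RTime(100))
result = viseme_component.AddVisemeKey(viseme_key)
```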

RLPy.RIVisemeComponent.AddVisemeOptionClip(self, kSmoothOption, kStartTick, strClipName)

Add a viseme option clip. Use it to adjust the strength and smoothing of the viseme animation separately for the tongue, lips, and jaw.

Parameters

- kSmoothOption[IN] Settings of Smooth/Strength - RLPy.RVisemeSmoothOption

- kStartTick[IN] Specifies the start Tick of the clip - RLPy.RLTime

- strClipName[IN] The name of the clip - string

Returns

Success or Fail or InvalidParameter - RLPy.RStatus

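```python
import RLPy

# Get the viseme component of the first avatar in the scene
avatar = RLPy.RScene.GetAvatars()[0]
viseme_component = avatar.GetVisemeComponent()

audio_object = RLPy.RAudio.CreateAudioObject()
audio_object.Load("C:\\sample.wav")
clip_name = "VisemeClip"
clip_start_time = RLPy.RTime(0)
viseme_component.LoadVocal(audio_object, clip_start_time, clip_name)

# viseme_smooth_option must be an RLPy.RVisemeSmoothOption prepared beforehand
viseme_component.AddVisemeOptionClip(viseme_smooth_option, clip_start_time, clip_name)
```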

RLPy.RIVisemeComponent.AddVisemesClip(self, kTick, strClipName, kClipLength)

Add a viseme clip.

Experimental API

Parameters

- kTick[IN] Setting of clip's begin Tick - RLPy.RLTime

- strClipName[IN] Name of the Clip - string

- kClipLength[IN] Tick of clip length, the value must be greater than 0 - RLPy.RLTime

Returns

Success or Fail or InvalidParameter - RLPy.RStatus

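```python
import RLPy

# Get the viseme component of the first avatar in the scene
avatar = RLPy.RScene.GetAvatars()[0]
viseme_component = avatar.GetVisemeComponent()

clip_name = "VisemeClip"
time_begin = RLPy.RTime(0)
clip_length = RLPy.RTime(1000)
result = viseme_component.AddVisemesClip(time_begin, clip_name, clip_length)
```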
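The requirement that kClipLength be greater than 0 can be checked before the call is made. The following is a minimal sketch rather than part of the RLPy API: the helper name `add_clip_checked` and its `make_time` parameter are hypothetical, and it assumes times are supplied as plain numbers that `make_time` (for example `RLPy.RTime`) converts into time objects.

```python
def add_clip_checked(viseme_component, make_time, start, clip_name, length):
    """Add a viseme clip after validating the documented length constraint.

    Hypothetical helper: make_time is any callable (e.g. RLPy.RTime) that
    converts a number into the time object AddVisemesClip expects.
    """
    if length <= 0:
        raise ValueError("clip length must be greater than 0")
    return viseme_component.AddVisemesClip(
        make_time(start), clip_name, make_time(length)
    )
```

Inside iClone this could be called as `add_clip_checked(viseme_component, RLPy.RTime, 0, "VisemeClip", 1000)`. Raising early keeps an invalid length from reaching the API, whose behavior for such input is only described as returning InvalidParameter.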

RLPy.RIVisemeComponent.AddVisemesClipWithData(self, args)

Add a viseme clip together with its audio, viseme keys, word data, and text.

Experimental API

Parameters

- kTime[IN] Start time of the clip - RLPy.RLTime

- strClipName[IN] Name of the clip - string

- pAudio[IN] Audio object - RLPy.RIAudioObject

- kKeys[IN] List of viseme keys - RLPy.RVisemeKey

- kWords[IN] List of word data - RLPy.RWordData

- strText[IN] Text of the clip - string

Returns

Success or Fail - RLPy.RStatus

```python
# No example
```

RLPy.RIVisemeComponent.GetClip(self, uIndex)

Get the facial clip at the given index.

Experimental API

Parameters

- uIndex[IN] Index of the clip - int

Returns

Pointer to the clip - RLPy.RIClip

```python
# No example
```

RLPy.RIVisemeComponent.GetClipByTime(self, kHitTime)

Get the facial clip whose range contains the given time.

Experimental API

Parameters

- kHitTime[IN] A time within the clip's range - RLPy.RLTime

Returns

Pointer to the clip - RLPy.RIClip

```python
# No example
```

RLPy.RIVisemeComponent.GetClipCount(self)

Get number of facial clips.

Experimental API

Returns

Number of facial clips - int

```python
# No example
```
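No official example is listed for the clip accessors. As a hedged sketch, the count and index methods could be combined to walk every clip. The helper name `collect_clips` is hypothetical, and the code assumes valid indices run from 0 to GetClipCount() - 1, which the reference does not state explicitly.

```python
def collect_clips(viseme_component):
    """Return every facial clip on the component as a list.

    Assumption: valid clip indices are 0 .. GetClipCount() - 1.
    """
    clip_count = viseme_component.GetClipCount()
    return [viseme_component.GetClip(index) for index in range(clip_count)]
```

Inside iClone, `viseme_component` would typically come from `avatar.GetVisemeComponent()`.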

RLPy.RIVisemeComponent.GetStrength(self)

Get the strength of viseme.

Experimental API

Returns

The value of strength - float

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

strength = viseme_component.GetStrength()
```

RLPy.RIVisemeComponent.GetVisemeBones(self)

Get all viseme bones.

Experimental API

Returns

All viseme bones - list

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

# Get viseme bones
viseme_bones = viseme_component.GetVisemeBones()
```

RLPy.RIVisemeComponent.GetVisemeKey(self, kTime, kKey)

Get viseme key information.

Experimental API

Parameters

- kTime[IN] Time (tick) of the key.

- kKey[OUT] Viseme key - RLPy.RVisemeKey

Returns

Success or Fail or InvalidParameter - RLPy.RStatus

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

# Get viseme key
ret_key = RLPy.RVisemeKey()
result = viseme_component.GetVisemeKey(RLPy.RTime(0), ret_key)
```

RLPy.RIVisemeComponent.GetVisemeKeys(self)

Get the ticks of all viseme keys.

Experimental API

Returns

Ticks of all viseme keys - list

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

# Get viseme keys
viseme_key_list = viseme_component.GetVisemeKeys()
```
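Since GetVisemeKeys returns key times and GetVisemeKey fills in a key for a given time, the two could be chained to read every key. This is a sketch under assumptions: it presumes each entry returned by GetVisemeKeys can be passed directly as kTime, and the helper name `read_all_viseme_keys` and its `make_key` parameter (for example `RLPy.RVisemeKey`) are hypothetical.

```python
def read_all_viseme_keys(viseme_component, make_key):
    """Return a list of (status, key) pairs, one per viseme key time.

    Assumption: each item from GetVisemeKeys() is accepted as the kTime
    argument of GetVisemeKey(). make_key builds an empty key object that
    GetVisemeKey() fills in as its output parameter.
    """
    results = []
    for key_time in viseme_component.GetVisemeKeys():
        key = make_key()
        status = viseme_component.GetVisemeKey(key_time, key)
        results.append((status, key))
    return results
```

Returning the status alongside each key leaves it to the caller to decide how to treat a Fail or InvalidParameter result.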

RLPy.RIVisemeComponent.GetVisemeMorphWeights(self)

Get all morph weights of viseme.

Experimental API

Returns

The value of morph weights - float

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

# Get viseme morph weights
viseme_weights = viseme_component.GetVisemeMorphWeights()
```

RLPy.RIVisemeComponent.GetVisemeNames(self)

Get the name list of the viseme controls.

Experimental API

Returns

Name list of the viseme controls - string

```python
# No example
```

RLPy.RIVisemeComponent.GetWords(self, nClipIndex = -1)

Get word data (AccuLips).

Experimental API

Parameters

- nClipIndex[IN] Index of the clip. If the value is -1, the words of all clips are returned - int

Returns

word data - list

```python
# No example
```
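No official example is listed for GetWords. One possible use, sketched here, is to gather the word data clip by clip instead of requesting everything at once with -1. The helper name `words_by_clip` is hypothetical, and the code assumes the clip indices accepted by GetWords match 0 to GetClipCount() - 1.

```python
def words_by_clip(viseme_component):
    """Map each clip index to the word data GetWords() returns for it.

    Assumption: GetWords() accepts the same indices as GetClip(),
    that is 0 .. GetClipCount() - 1.
    """
    return {
        clip_index: viseme_component.GetWords(clip_index)
        for clip_index in range(viseme_component.GetClipCount())
    }
```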

RLPy.RIVisemeComponent.LoadVocal(self, args)

Import a voice source to generate lip-sync animation using AccuLips.

Parameters

- pAudio[IN] Input audio source - RLPy.RIAudioObject

- strText[IN] Text corresponding to the input audio source. If the text is empty, AccuLips generates it from the audio automatically - string

- kStartTime[IN] Specifies the start time of the clip - RLPy.RLTime

- strClipName[IN] The name of the clip - string

Returns

- RLPy.RStatus.Success - Succeed

- RLPy.RStatus.Failure - Fail

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

# Load vocal from an audio object created from a file
audio = RLPy.RAudio.CreateAudioObject()
audio_path = "./SpecialDay.wav"
audio.Load(audio_path)
start_time = RLPy.RGlobal.GetTime()
clip_name = "Default"
result = viseme_component.LoadVocal(audio, start_time, clip_name)

# Load vocal from the audio recorder
audio_recorder = RLPy.RAudioRecorder()
audio_recorder.Start()
audio_recorder.Stop()
audio_source = audio_recorder.GetAudio()
start_time = RLPy.RGlobal.GetTime()
clip_name = "Default"
result = viseme_component.LoadVocal(audio_source, start_time, clip_name)
```

RLPy.RIVisemeComponent.RemoveVisemesClip(self, kTick)

Remove viseme clip.

Experimental API

Parameters

- kTick[IN] The Tick for finding viseme clip - RLPy.RLTime

Returns

Success or Fail or InvalidParameter - RLPy.RStatus

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

# Remove a viseme clip at 1000 ms
result = viseme_component.RemoveVisemesClip(RLPy.RTime(1000))
```

RLPy.RIVisemeComponent.RemoveVisemesKey(self, kKey)

Remove viseme key.

Experimental API

Parameters

- kKey[IN] The key to be removed, located by its time - RLPy.RVisemeKey

Returns

Success or Fail or InvalidParameter - RLPy.RStatus

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

# Remove visemes key
key = RLPy.RVisemeKey()
key.SetTime(RLPy.RTime(300))
key.SetID(RLPy.EVisemeID_AH)
key.SetWeight(80)
result = viseme_component.RemoveVisemesKey(key)
```

RLPy.RIVisemeComponent.TextToSpeech(self, args)

Set speech from text.

Experimental API

Parameters

- strContent[IN] Content of speech - string

- eLanguage[IN] Language of speech system - RLPy.ELanguage

  - RLPy.ELanguage_TW

  - RLPy.ELanguage_US

- fVolume[IN] Settings of Volume, the range is from 0.0 to 100.0 - float

- fPitch[IN] Settings of Pitch, the range is from 0.0 to 100.0 - float

- fSpeed[IN] Settings of Speed, the range is from 0.0 to 100.0 - float

Returns

Success or Fail or InvalidParameter - RLPy.RStatus

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

result = viseme_component.TextToSpeech("speech", RLPy.ELanguage_US, 100, 50, 50)
```
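Volume, pitch, and speed are each documented with a range of 0.0 to 100.0, but how out-of-range values are handled is not stated beyond the InvalidParameter status. One defensive option, sketched below, is to clamp them before the call. The helper names `clamp_setting` and `speak` are hypothetical, and the default values simply mirror those shown in the TextToVisemeData signature, which is an assumption for TextToSpeech.

```python
def clamp_setting(value, low=0.0, high=100.0):
    """Clamp a volume, pitch, or speed value to the documented 0.0-100.0 range."""
    return max(low, min(high, float(value)))


def speak(viseme_component, text, language, volume=100.0, pitch=50.0, speed=50.0):
    """Call TextToSpeech with every numeric setting clamped to 0.0-100.0.

    Argument order follows the reference example:
    content, language, volume, pitch, speed.
    """
    return viseme_component.TextToSpeech(
        text,
        language,
        clamp_setting(volume),
        clamp_setting(pitch),
        clamp_setting(speed),
    )
```

Inside iClone this could be called as `speak(viseme_component, "speech", RLPy.ELanguage_US, 100, 50, 50)`.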

RLPy.RIVisemeComponent.TextToVisemeData(self, strContent, fVolume = 100., fPitch = 50., fSpeed = 50.)

Get viseme data from text.

Experimental API

Parameters

- strContent[IN] Content of speech - string

- fVolume[IN] Settings of Volume, the range is from 0.0 to 100.0 - float

- fPitch[IN] Settings of Pitch, the range is from 0.0 to 100.0 - float

- fSpeed[IN] Settings of Speed, the range is from 0.0 to 100.0 - float

Returns

Success or Fail or InvalidParameter - int

```python
import RLPy

# Get avatar viseme component
avatar_list = RLPy.RScene.GetAvatars()
avatar = avatar_list[0]
viseme_component = avatar.GetVisemeComponent()

result = viseme_component.TextToVisemeData("speech", 100, 50, 50)
```